Space Makes Noise—And Here’s the Eerie Song an Eclipse Sings

Thought you could only experience the astronomical event with your eyes?

Out of silence, the bright and piercing tone of a clarinet sounds mezzo forte. Its pitch rises momentarily, then descends with no discernible rhythmic pattern before giving way to the eerie, insistent blare of a bassoon. The singular, erratic melody continues, reaching lower and lower notes, until the sound suddenly evaporates into a succession of low clicks.

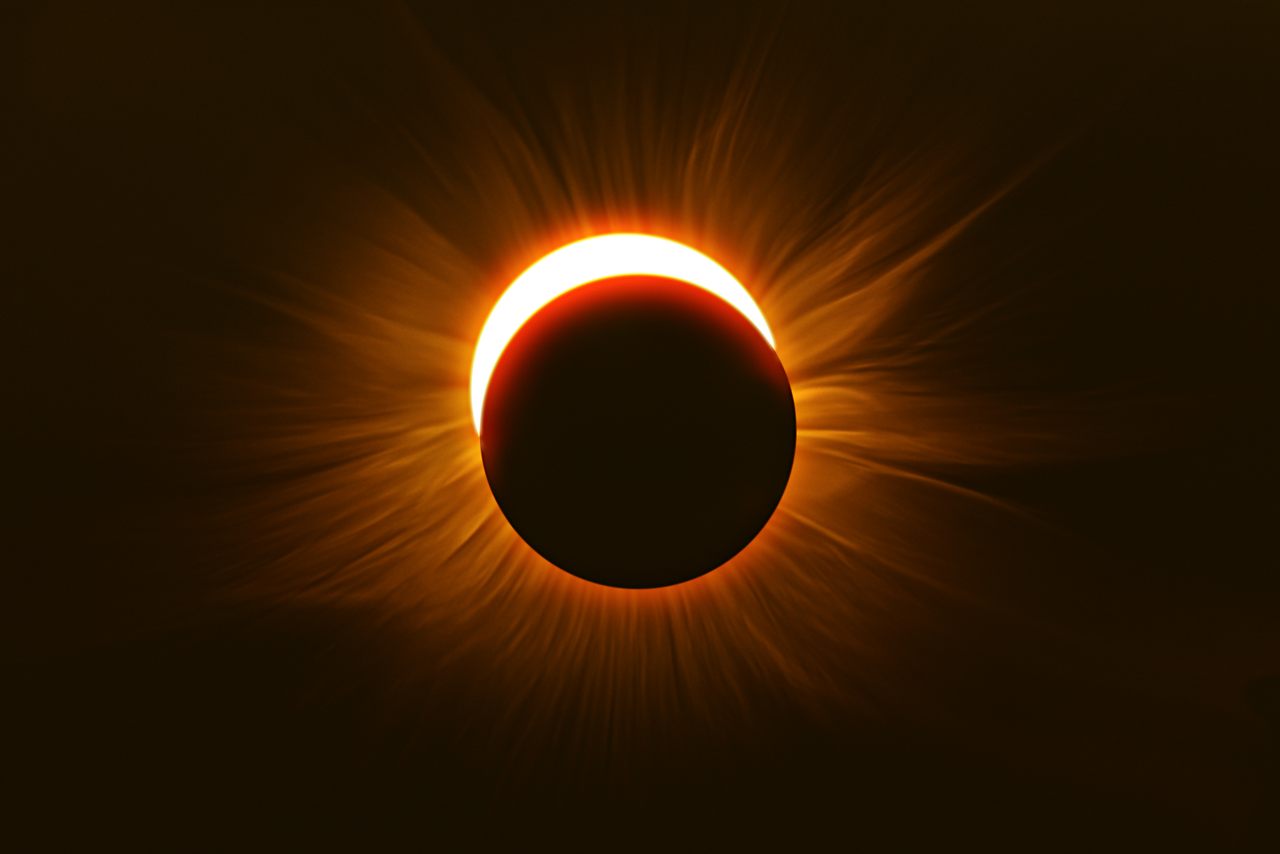

This music is not reverberating against the walls of a theater or drifting from under the door of a practice room, nor is it being played by the hands of a diligent musician. These erratic sounds are a computerized expression of light data, captured in real time during a solar eclipse.

Sonification is the practice of using non-verbal audio to represent data, as an alternative or supplement to visual displays. The earliest successful applications of sonification date back to the early twentieth century, and since then, sonified data has been used in scientific, medical, geographical, and astronomical settings for both research and creative purposes.

The LightSound Project, an initiative started in 2017 by a team of astronomers and engineers at the Center for Astrophysics | Harvard & Smithsonian, designed a handheld sonification device that senses the changes in light that occur during an eclipse. As the moon passes in front of the sun, the device translates the shifting light into flute, clarinet, and bassoon tones, as well as percussive clicks.

The connection between sound and celestial bodies is by no means a recent idea. Whereas modern sonification relies on hardware and software to translate a set of data into sound, astronomers and philosophers proposed centuries ago that the sun, moon, and planets produce tones all their own, tones that are simply imperceptible to the human ear.

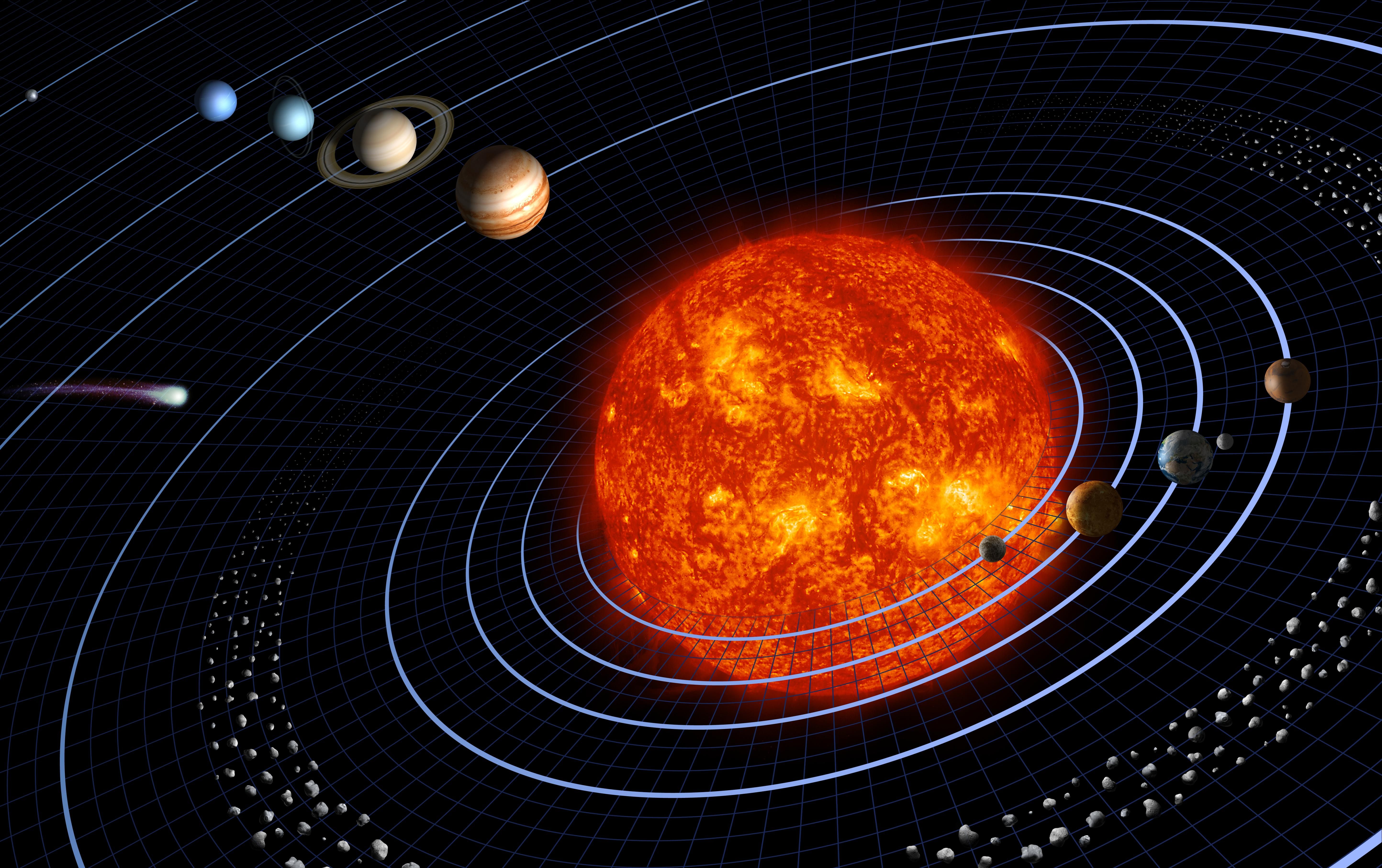

Musica universalis, also referred to as the “music of the spheres,” is a theory first cultivated in Ancient Greece and most often attributed to the philosopher Pythagoras. It proposed that the orbital revolution of each celestial body resonates with a tone, or “hum,” that, while inaudible, can be felt energetically or “heard” by the soul. The Greek philosopher Plato notably described astronomy and music as “sister sciences” for their shared basis in harmonious motion.

In 1619, Johannes Kepler, a prolific German polymath, expanded on these ancient theories in his book Harmonices Mundi (The Harmony of the World). In it, he explored the nature of harmonic proportions in both music and planetary motion, ultimately arriving at his third law of planetary motion and giving mathematical rigor to the previously metaphorical idea of the music of the spheres. Kepler found that the ratio between a planet's slowest and fastest angular speeds around the sun, and between the extreme speeds of planets in neighboring orbits, closely approximates the ratio between two musical notes.
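For readers who want the mathematics, the relations below restate, in modern notation, the third law and the speed ratio Kepler compared with musical intervals. The symbols are this sketch's own, not the article's: T is a planet's orbital period, a the semi-major axis of its elliptical orbit, e its eccentricity, r its distance from the sun, and ω its angular speed as seen from the sun.

$$
\frac{T^2}{a^3} = \text{the same constant for every planet}
\qquad\text{so}\qquad
T^2 \approx a^3 \quad (T \text{ in years},\ a \text{ in astronomical units})
$$

$$
\frac{\omega_{\max}}{\omega_{\min}} = \left(\frac{r_{\max}}{r_{\min}}\right)^{2} = \left(\frac{1+e}{1-e}\right)^{2}
$$

The second relation follows from Kepler's second law: a planet sweeps out equal areas in equal times, so its angular speed varies as 1/r², while its distance ranges from r_min = a(1 − e) to r_max = a(1 + e). For Saturn, with an eccentricity of about 0.056, the ratio comes to roughly 1.25, close to the 5:4 ratio of a major third.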

In his words, “the heavenly motions are nothing but a continuous song for several voices, to be perceived by intellect, not by the ear; a music which… sets landmarks in the immeasurable flow of time.” From these findings, Kepler concluded that the planets form a celestial choir, with Saturn and Jupiter as bass, Mars as tenor, Venus and Earth as alto, and Mercury as soprano.

Despite the groundbreaking discoveries of Kepler and his contemporaries, which predated modern astronomical research, the relationship between the celestial and the musical remains enigmatic in its complexity. To put the idea more simply, master astrologer Rick Levine says that “everything is vibration in sympathetic resonance.” In his view, we think of planets as solid objects, just as we do everything else our eyes perceive as solid, because they vibrate at such an extraordinarily low frequency. Ultimately, though, they are, like music and all other matter, just particles and waves. The sounds we perceive, such as speech and musical tones, vibrate at hundreds or thousands of cycles per second.

While planetary resonance is more complex and therefore measured differently, the same principle of cycles per unit of time applies. The farther a planet is from the sun, the longer it takes to complete an orbit. Earth completes one orbit of the sun each year, while the moon circles Earth about 13 times per year. Saturn, by contrast, completes one orbit every 29.4 years. These planetary vibrations, just like musical notes, resonate with a series of harmonic overtones, and preliminary research in quantum physics seeks to determine how the resonance of these harmonics affects humans and other living beings on Earth.
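To make the “cycles per unit of time” comparison concrete, here is a short Python sketch that converts the orbital periods quoted above into cycles per second (hertz), the same unit used for musical pitch. It assumes a Julian year of 365.25 days, and the function name `cycles_per_second` is purely illustrative.

```python
# Assumption: a Julian year of 365.25 days, expressed in seconds (31,557,600 s).
SECONDS_PER_YEAR = 365.25 * 24 * 60 * 60


def cycles_per_second(period_years: float) -> float:
    """Convert an orbital period in years into a frequency in hertz (cycles per second)."""
    return 1.0 / (period_years * SECONDS_PER_YEAR)


# Orbital periods taken from the figures quoted in the text.
periods_years = {
    "Earth": 1.0,        # one orbit of the sun per year
    "Moon": 1.0 / 13,    # about 13 orbits of Earth per year
    "Saturn": 29.4,      # one orbit of the sun every 29.4 years
}

for body, period in periods_years.items():
    print(f"{body}: {cycles_per_second(period):.2e} Hz")
```

Earth's orbit works out to roughly 3 × 10⁻⁸ Hz and Saturn's to about 1 × 10⁻⁹ Hz, far below the roughly 20 Hz lower limit of human hearing, which fits the idea that any such “hum” would be inaudible.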

Levine notes that “music affects us, because the frequencies and harmonies have an architectonic physical impact.” In his view, this is precisely why different genres of music provoke such a range of emotional responses in us: the vibrations of the particles that make up our bodies are altered in a way that is specific to, and reciprocal with, the musical vibrations. Although the idea currently lives in the realm of metaphysical speculation, it is quite possible that the very same phenomenon is occurring on a larger, albeit subtler, scale between us and the planetary bodies.

For the LightSound Project, the auditory display produced by sonification relies on software in which a sound is assigned to every possible data value read from a signal. When sonification is used in the astronomical sciences, the signal being read is typically light intensity, measured here in lux (lx). The software then generates audio from the data, with tones and timbres predetermined by the scientists who wrote the code.

Graduate student Sóley Hyman drew on her expertise as a musician to select the instruments that express light brightness and intensity, determining through trial and error that their timbres were the most agreeable to the human ear at the frequencies the LightSound device generates. High flute tones signal very bright light, while clarinet and bassoon guide the ear as the light dims into darkness. In complete darkness, only a succession of simple clicks can be heard.
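The article does not publish LightSound's actual code, so the following is only a minimal Python sketch of the general approach described above: each light reading in lux is assigned a voice (flute, clarinet, bassoon, or clicks) and a pitch, with brighter light producing higher tones. The lux thresholds, the 55 to 1760 Hz pitch range, the logarithmic scaling, and the names `choose_voice` and `pitch_hz` are all hypothetical assumptions for illustration, not values taken from the real device.

```python
import math

# Hypothetical thresholds in lux; the real LightSound values are not given in the text.
DARKNESS_LUX = 1.0          # below this, treat the sky as dark and play clicks
BASSOON_MAX_LUX = 100.0     # dim light
CLARINET_MAX_LUX = 10_000.0 # moderate light
MAX_LUX = 100_000.0         # assumption: roughly full, direct sunlight


def choose_voice(lux: float) -> str:
    """Pick an instrument for a light reading: brighter light gets a higher voice."""
    if lux < DARKNESS_LUX:
        return "click"
    if lux < BASSOON_MAX_LUX:
        return "bassoon"
    if lux < CLARINET_MAX_LUX:
        return "clarinet"
    return "flute"


def pitch_hz(lux: float, low_hz: float = 55.0, high_hz: float = 1760.0) -> float:
    """Map brightness to pitch on a logarithmic scale (hypothetical 5-octave range)."""
    clamped = min(max(lux, DARKNESS_LUX), MAX_LUX)
    # Fraction of the way from darkness to full sunlight, measured in orders of magnitude.
    fraction = math.log10(clamped / DARKNESS_LUX) / math.log10(MAX_LUX / DARKNESS_LUX)
    return low_hz * (high_hz / low_hz) ** fraction


# Hypothetical readings as the moon covers more and more of the sun.
readings = [100_000, 20_000, 5_000, 50, 0.2]
for lux in readings:
    voice = choose_voice(lux)
    if voice == "click":
        print(f"{lux:>9} lx -> click")
    else:
        print(f"{lux:>9} lx -> {voice} at {pitch_hz(lux):.0f} Hz")
```

A logarithmic scale is used in this sketch because daylight and near-darkness differ by several orders of magnitude in lux; a linear mapping would spend almost the entire pitch range on the brightest readings and leave the dimming just before totality nearly flat.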

The original objective of the LightSound Project was to serve the Blind and Low Vision community by developing a tool for experiencing a solar eclipse through sound, and that remains a primary focus of the initiative. However, these developments in sonification are proving to have significant implications for scientific research.

Hyman says that using sonification for eclipses and other astronomical phenomena is not only raising awareness of it as a valuable research tool and increasing accessibility in scientific fields, but also expanding analytical possibilities. “Our ears may be more astute at picking up signals than our eyes,” she says, suggesting that sonification could help researchers make astronomical discoveries that sight alone might miss.

In the seven years since it began, the LightSound Project has grown substantially. Workshops that teach attendees how to build their own LightSound sonification devices are held throughout the year, and anyone can request a device—either fully assembled or in parts for self-assembly—at the project’s website. In anticipation of the total solar eclipse on April 8, the project’s team has received nearly 2,000 device requests, a demand they are working diligently to fill.

While many of these requests have come from members of the Blind and Low Vision community, a great number have come from sighted individuals as well. LightSound Project astronomer Allyson Bieryla notes that “eclipses are very emotional events” and that experiencing one visually, aurally, or both is often even “more impactful than expected.” This impact undoubtedly goes beyond our senses of sight and hearing; though harder to quantify, all living beings seem to feel eclipses energetically as well. Sonification is just one of the many ways science is expanding our understanding of the physical and emotional effects these astronomical events have on humankind.

We are quick to think of the cosmos visually. We gaze at the moon while taking care to not look directly at the sun, and focus our eyes through telescopes, eager to glimpse the planets. For centuries, eclipses have been regarded by cultures around the world as the visually captivating events that they are. But, as with most things in existence, there is more to these cosmological happenings than meets the eye, and the sun, moon, planets, and stars are inviting us to listen.
